
AgentPress: Building Blocks for AI Agents

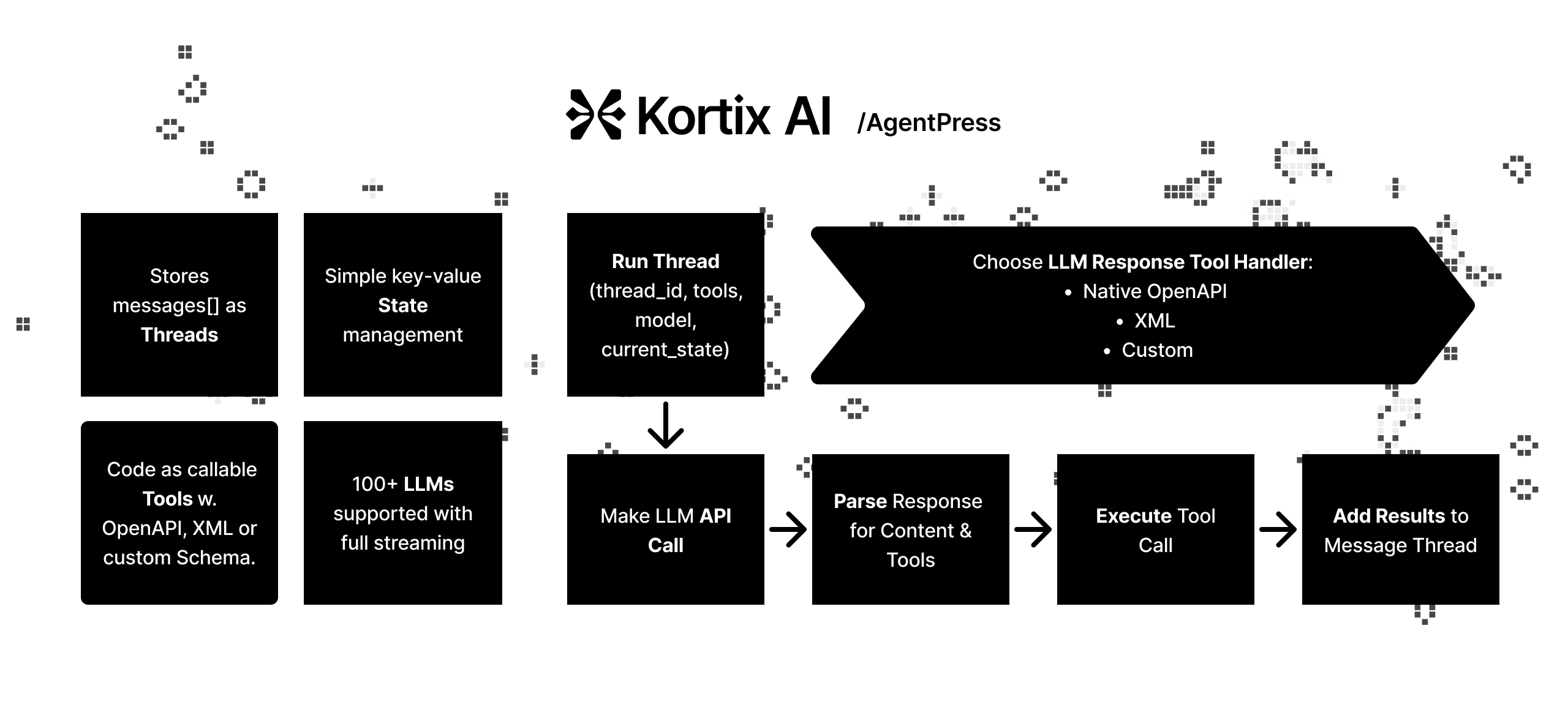

AgentPress is a collection of simple but powerful utilities that serve as building blocks for creating AI agents. Plug, play, and customize.

See How It Works for an explanation of this flow.

Core Components

- Threads: Manage `Messages[]` as threads.
- Tools: Register code as callable tools with definitions in both OpenAPI and XML.
- Response Processing: Support for native-LLM OpenAPI and XML-based tool calling.
- State Management: Thread-safe JSON key-value state management.
- LLM: 100+ LLMs using the OpenAI I/O format, powered by LiteLLM.

Installation & Setup

- Install the package:

```bash
pip install agentpress
```

- Initialize AgentPress in your project:

```bash
agentpress init
```

This creates an `agentpress` directory with all the core utilities.

See File Overview for an explanation of each generated file.

- If you selected the example agent during initialization:
  - Creates an `agent.py` file with a web development agent example
  - Creates a `tools` directory with example tools:
    - `files_tool.py`: File operations (create/update files, read directory and load into state)
    - `terminal_tool.py`: Terminal command execution
  - Creates a `workspace` directory for the agent to work in

Quick Start

- Set up your environment variables in a `.env` file:

```
OPENAI_API_KEY=your_key_here
ANTHROPIC_API_KEY=your_key_here
GROQ_API_KEY=your_key_here
```

- Create a calculator tool with OpenAPI schema:

```python
from agentpress.tool import Tool, ToolResult, openapi_schema

class CalculatorTool(Tool):
    @openapi_schema({
        "type": "function",
        "function": {
            "name": "add",
            "description": "Add two numbers",
            "parameters": {
                "type": "object",
                "properties": {
                    "a": {"type": "number"},
                    "b": {"type": "number"}
                },
                "required": ["a", "b"]
            }
        }
    })
    async def add(self, a: float, b: float) -> ToolResult:
        try:
            result = a + b
            return self.success_response(f"The sum is {result}")
        except Exception as e:
            return self.fail_response(f"Failed to add numbers: {str(e)}")
```

- Or create a tool with XML schema:

```python
from agentpress.tool import Tool, ToolResult, xml_schema

class FilesTool(Tool):
    @xml_schema(
        tag_name="create-file",
        mappings=[
            {"param_name": "file_path", "node_type": "attribute", "path": "."},
            {"param_name": "file_contents", "node_type": "content", "path": "."}
        ],
        example='''
        <create-file file_path="path/to/file">
        File contents go here
        </create-file>
        '''
    )
    async def create_file(self, file_path: str, file_contents: str) -> ToolResult:
        # Implementation here
        pass
```

- Use the Thread Manager with tool execution:

```python
import asyncio
from agentpress.thread_manager import ThreadManager
from calculator_tool import CalculatorTool  # the CalculatorTool above, saved as calculator_tool.py

async def main():
    # Initialize thread manager and add tools
    manager = ThreadManager()
    manager.add_tool(CalculatorTool)

    # Create a new thread
    thread_id = await manager.create_thread()

    # Add your message
    await manager.add_message(thread_id, {
        "role": "user",
        "content": "What's 2 + 2?"
    })

    # Run with streaming and tool execution
    response = await manager.run_thread(
        thread_id=thread_id,
        system_message={
            "role": "system",
            "content": "You are a helpful assistant with calculation abilities."
        },
        model_name="anthropic/claude-3-5-sonnet-latest",
        execute_tools=True,
        native_tool_calling=True,  # Native function calling, as opposed to xml_tool_calling=True
        parallel_tool_execution=True  # Execute tool calls in parallel rather than sequentially
    )

if __name__ == "__main__":
    asyncio.run(main())
```

- View conversation threads in a web UI:

```bash
streamlit run agentpress/thread_viewer_ui.py
```

How It Works

Each AI agent iteration follows a clear, modular flow:

- Message & LLM Handling
  - Messages are managed in threads via `ThreadManager`
  - LLM API calls are made through a unified interface (`llm.py`)
  - Supports streaming responses for real-time interaction
- Response Processing
  - The LLM response can contain both content and tool calls
  - Content is streamed in real time
  - Tool calls are parsed using one of:
    - Standard OpenAPI function calling
    - XML-based tool definitions
    - Custom parsers (extend `ToolParserBase`)
- Tool Execution
  - Tools are executed either in real time during streaming (`execute_tools_on_stream`) or after the complete response
  - Tool calls run in parallel or in sequential order
  - Supports both standard and XML tool formats
  - Extensible through `ToolExecutorBase`
- Results Management
  - Results from both the LLM content and tool executions are added to the thread
  - Supports different result formats (standard/XML)
  - Customizable through `ResultsAdderBase`

This modular architecture allows you to:

- Use standard OpenAPI function calling

- Switch to XML-based tool definitions

- Create custom processors by extending base classes

- Mix and match different approaches
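The per-iteration flow above can be sketched in a few lines. This is a minimal, self-contained illustration, not AgentPress's implementation: it assumes the LLM has already returned an assistant message in the OpenAI chat format (a `tool_calls` list whose `function.arguments` field is a JSON string), and `TOOLS`, `add`, `execute_call`, and `run_tool_calls` are hypothetical names. Tool calls run either in parallel with `asyncio.gather` or one after another, and each result is appended to the thread as an OpenAI-style `role: "tool"` message.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable

# Hypothetical tool table: tool name -> async callable returning a string.
async def add(a: float, b: float) -> str:
    return f"The sum is {a + b}"

TOOLS: dict[str, Callable[..., Awaitable[str]]] = {"add": add}

async def execute_call(call: dict[str, Any]) -> dict[str, Any]:
    """Run one OpenAI-style tool call and wrap its output as a tool message."""
    fn = call["function"]
    try:
        args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
        content = await TOOLS[fn["name"]](**args)
    except Exception as e:
        # Report the failure back to the LLM instead of aborting the iteration
        content = f"Error: {e}"
    return {"role": "tool", "tool_call_id": call["id"], "content": content}

async def run_tool_calls(
    thread: list[dict[str, Any]],
    assistant_msg: dict[str, Any],
    parallel: bool = True,
) -> None:
    """Append the assistant message, execute its tool calls, append the results."""
    thread.append(assistant_msg)
    calls = assistant_msg.get("tool_calls") or []
    if parallel:
        # asyncio.gather returns results in the same order as the calls
        results = await asyncio.gather(*(execute_call(c) for c in calls))
    else:
        results = [await execute_call(c) for c in calls]
    thread.extend(results)
```

Catching errors per call is a choice made for this sketch: one failing tool doesn't stop the others, and the LLM sees the error text on its next turn.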

File Overview

Core Components

agentpress/llm.py

LLM API interface built on LiteLLM. Supports 100+ LLMs through the OpenAI-compatible format, with streaming, retry logic, and error handling.
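The retry policy in `llm.py` isn't described here, so as an illustration of the general idea only, here is a minimal sketch of retrying an async call with exponential backoff. `with_retries`, `max_retries`, and `base_delay` are hypothetical names; a production client would typically retry only on transient errors such as rate limits or timeouts rather than on every exception.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")

async def with_retries(
    make_call: Callable[[], Awaitable[T]],
    max_retries: int = 3,
    base_delay: float = 1.0,
) -> T:
    """Await make_call(), retrying up to max_retries times with exponential backoff."""
    for attempt in range(max_retries + 1):
        try:
            return await make_call()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            # Sleep base_delay, 2 * base_delay, 4 * base_delay, ... between attempts
            await asyncio.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

The function takes a factory rather than a coroutine because a coroutine object can only be awaited once, so every attempt needs a fresh one; with LiteLLM that might look like `await with_retries(lambda: litellm.acompletion(model=model, messages=messages))`.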

agentpress/thread_manager.py

Manages conversation threads with support for:

- Message history management

- Tool registration and execution

- Streaming responses

- Both OpenAPI and XML tool calling patterns
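As a rough picture of what "managing Messages[] as threads" means, here is a minimal in-memory sketch in which each thread ID maps to a list of OpenAI-format message dicts. `InMemoryThreads` and its methods are hypothetical; they only loosely mirror the `create_thread`/`add_message` calls from the Quick Start, and the real `ThreadManager` additionally handles tool registration, execution, and streaming.

```python
import asyncio
import uuid
from typing import Any

class InMemoryThreads:
    """Hypothetical minimal thread store: thread_id -> list of message dicts."""

    def __init__(self) -> None:
        self._threads: dict[str, list[dict[str, Any]]] = {}

    async def create_thread(self) -> str:
        thread_id = str(uuid.uuid4())
        self._threads[thread_id] = []
        return thread_id

    async def add_message(self, thread_id: str, message: dict[str, Any]) -> None:
        if thread_id not in self._threads:
            raise KeyError(f"Unknown thread: {thread_id}")
        self._threads[thread_id].append(message)

    async def list_messages(self, thread_id: str) -> list[dict[str, Any]]:
        # Return a copy so callers can't mutate the stored history by accident
        return list(self._threads[thread_id])

async def demo() -> None:
    threads = InMemoryThreads()
    thread_id = await threads.create_thread()
    await threads.add_message(thread_id, {"role": "user", "content": "What's 2 + 2?"})
    print(await threads.list_messages(thread_id))

if __name__ == "__main__":
    asyncio.run(demo())
```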

agentpress/tool.py

Base infrastructure for tools with:

- OpenAPI schema decorator for standard function calling

- XML schema decorator for XML-based tool calls

- Standardized ToolResult responses
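The Quick Start tools are tagged with `@openapi_schema(...)` and return `self.success_response(...)` or `self.fail_response(...)`. The sketch below shows one plausible way such pieces can fit together: a decorator that stores the schema on the method, plus a small result dataclass. `ToolResult`'s fields, `attach_schema`, `BaseTool`, and the `tool_schema` attribute are all assumptions made for illustration, not AgentPress's actual definitions.

```python
import asyncio
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class ToolResult:
    """Hypothetical result shape: a success flag plus an output message."""
    success: bool
    output: str

def attach_schema(schema: dict[str, Any]) -> Callable[[Callable], Callable]:
    """Hypothetical stand-in for @openapi_schema: stores the schema on the method."""
    def decorator(func: Callable) -> Callable:
        func.tool_schema = schema  # the function itself is returned unchanged
        return func
    return decorator

class BaseTool:
    """Hypothetical base class providing the two response helpers."""

    def success_response(self, output: str) -> ToolResult:
        return ToolResult(success=True, output=output)

    def fail_response(self, message: str) -> ToolResult:
        return ToolResult(success=False, output=message)

class CalculatorTool(BaseTool):
    @attach_schema({
        "type": "function",
        "function": {
            "name": "add",
            "description": "Add two numbers",
            "parameters": {
                "type": "object",
                "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
                "required": ["a", "b"],
            },
        },
    })
    async def add(self, a: float, b: float) -> ToolResult:
        return self.success_response(f"The sum is {a + b}")

if __name__ == "__main__":
    print(CalculatorTool.add.tool_schema["function"]["name"])
    print(asyncio.run(CalculatorTool().add(2, 3)))
```

Storing the schema as an attribute, rather than wrapping the function, keeps the method callable exactly as written while letting a registry discover it later.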

agentpress/tool_registry.py

Central registry for tool management:

- Registers both OpenAPI and XML tools

- Maintains tool schemas and implementations

- Provides tool lookup and validation
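A registry like the one described can be sketched by scanning a tool instance for methods that carry a schema and indexing them by function name. This assumes schemas are stored on a `tool_schema` attribute, which is a hypothetical convention (AgentPress's own decorators may store them differently); `ToolRegistry`, `register`, `get`, `schemas`, and `attach_schema` are illustrative names.

```python
import inspect
from typing import Any, Callable

def attach_schema(schema: dict[str, Any]) -> Callable[[Callable], Callable]:
    """Hypothetical schema decorator: stores the schema on the function."""
    def decorator(func: Callable) -> Callable:
        func.tool_schema = schema
        return func
    return decorator

class ToolRegistry:
    """Hypothetical registry: function name -> (bound method, schema)."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[Callable, dict[str, Any]]] = {}

    def register(self, tool: object) -> None:
        """Register every method on `tool` that carries a `tool_schema`."""
        for _, method in inspect.getmembers(tool, predicate=inspect.ismethod):
            schema = getattr(method, "tool_schema", None)
            if schema is None:
                continue  # plain helper method, not a tool
            name = schema["function"]["name"]
            if name in self._tools:
                raise ValueError(f"Duplicate tool name: {name}")
            self._tools[name] = (method, schema)

    def get(self, name: str) -> Callable:
        """Look up a tool's bound method, failing loudly on unknown names."""
        if name not in self._tools:
            raise KeyError(f"No tool registered as {name!r}")
        return self._tools[name][0]

    def schemas(self) -> list[dict[str, Any]]:
        """OpenAI-style schemas, e.g. for the `tools` list of a chat request."""
        return [schema for _, schema in self._tools.values()]
```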

agentpress/state_manager.py

Thread-safe state persistence:

- JSON-based key-value storage

- Atomic operations with locking

- Automatic file handling
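Here is a minimal sketch of a JSON key-value store in the spirit of `state_manager.py`, assuming state lives in a single JSON file: an `asyncio.Lock` serializes read-modify-write cycles, and writes go to a temporary file that is swapped in with `os.replace`, so a crash mid-write doesn't leave a half-written state file. `JsonState` and its `get`/`set` methods are hypothetical; the real module's API and locking strategy may differ.

```python
import asyncio
import json
import os
import tempfile
from typing import Any

class JsonState:
    """Hypothetical JSON-file key-value store with serialized, atomic writes."""

    def __init__(self, path: str) -> None:
        self.path = path
        self._lock = asyncio.Lock()

    def _read(self) -> dict[str, Any]:
        if not os.path.exists(self.path):
            return {}  # no state file yet: start empty
        with open(self.path, "r", encoding="utf-8") as f:
            return json.load(f)

    def _write(self, data: dict[str, Any]) -> None:
        # Write to a temp file in the same directory, then atomically replace
        directory = os.path.dirname(os.path.abspath(self.path))
        fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
        try:
            with os.fdopen(fd, "w", encoding="utf-8") as f:
                json.dump(data, f, indent=2)
            os.replace(tmp_path, self.path)
        except BaseException:
            os.unlink(tmp_path)  # don't leave stray temp files behind
            raise

    async def get(self, key: str, default: Any = None) -> Any:
        async with self._lock:
            return self._read().get(key, default)

    async def set(self, key: str, value: Any) -> None:
        async with self._lock:
            data = self._read()  # read-modify-write happens entirely under the lock
            data[key] = value
            self._write(data)
```

An `asyncio.Lock` only coordinates coroutines on one event loop; guarding against OS threads would need a `threading.Lock`, and guarding against other processes would need a file lock.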

Response Processing

agentpress/llm_response_processor.py

Handles LLM response processing with support for:

- Streaming and complete responses

- Tool call extraction and execution

- Result formatting and message management
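Streaming makes tool-call extraction harder because, in the OpenAI streaming format, each chunk carries only a delta: content arrives as text fragments, and a tool call's `function.arguments` JSON arrives in pieces keyed by the call's `index`. Below is a minimal sketch of reassembling them, assuming the chunks have already been converted to plain dicts shaped like OpenAI `choices[0].delta` objects. `accumulate_deltas` is a hypothetical helper, not AgentPress's processor.

```python
import json
from typing import Any, Iterable

def accumulate_deltas(deltas: Iterable[dict[str, Any]]) -> dict[str, Any]:
    """Merge streamed OpenAI-style deltas into one complete assistant message."""
    content_parts: list[str] = []
    calls: dict[int, dict[str, Any]] = {}  # partial tool calls keyed by stream index
    for delta in deltas:
        if delta.get("content"):
            content_parts.append(delta["content"])  # a real UI could render this immediately
        for tc in delta.get("tool_calls") or []:
            call = calls.setdefault(
                tc["index"],
                {"id": None, "type": "function", "function": {"name": "", "arguments": ""}},
            )
            if tc.get("id"):
                call["id"] = tc["id"]
            fn = tc.get("function") or {}
            if fn.get("name"):
                call["function"]["name"] += fn["name"]
            if fn.get("arguments"):
                call["function"]["arguments"] += fn["arguments"]  # JSON arrives in pieces
    message: dict[str, Any] = {"role": "assistant", "content": "".join(content_parts) or None}
    if calls:
        message["tool_calls"] = [calls[i] for i in sorted(calls)]
    return message

if __name__ == "__main__":
    stream = [
        {"role": "assistant", "content": "Let me calculate."},
        {"tool_calls": [{"index": 0, "id": "call_1", "type": "function",
                         "function": {"name": "add", "arguments": '{"a": 2, '}}]},
        {"tool_calls": [{"index": 0, "function": {"arguments": '"b": 2}'}}]},
    ]
    message = accumulate_deltas(stream)
    print(json.loads(message["tool_calls"][0]["function"]["arguments"]))
```

Arguments are only parsed once the stream ends here; executing tools during streaming would mean checking after each chunk whether a call's accumulated JSON is complete.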

Standard Processing

- `standard_tool_parser.py`: Parses OpenAPI function calls
- `standard_tool_executor.py`: Executes standard tool calls
- `standard_results_adder.py`: Manages standard results

XML Processing

- `xml_tool_parser.py`: Parses XML-formatted tool calls
- `xml_tool_executor.py`: Executes XML tool calls
- `xml_results_adder.py`: Manages XML results
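To illustrate how the `mappings` in the Quick Start's `@xml_schema` example could turn an XML tool call into keyword arguments, here is a sketch using the standard library's `xml.etree.ElementTree`. It assumes an `attribute` mapping reads the attribute named after `param_name`, a `content` mapping reads the element's text, and `path` is an ElementTree path relative to the tool element (`"."` being the element itself). `parse_xml_call` is a hypothetical helper; `xml_tool_parser.py` may work quite differently, for example by tolerating characters that a strict XML parser rejects.

```python
import xml.etree.ElementTree as ET
from typing import Any

def parse_xml_call(xml_text: str, tag_name: str, mappings: list[dict[str, str]]) -> dict[str, Any]:
    """Map an XML tool call onto keyword arguments using param/node-type mappings."""
    root = ET.fromstring(xml_text.strip())
    if root.tag != tag_name:
        raise ValueError(f"Expected <{tag_name}>, got <{root.tag}>")
    kwargs: dict[str, Any] = {}
    for m in mappings:
        node = root if m["path"] == "." else root.find(m["path"])
        if node is None:
            raise ValueError(f"Path {m['path']!r} not found in <{tag_name}>")
        if m["node_type"] == "attribute":
            kwargs[m["param_name"]] = node.get(m["param_name"])
        elif m["node_type"] == "content":
            # Strip the newlines around the content (a choice made for this sketch)
            kwargs[m["param_name"]] = (node.text or "").strip()
        else:
            raise ValueError(f"Unsupported node_type: {m['node_type']}")
    return kwargs
```

The resulting dict can be passed straight to the tool method, e.g. `await files_tool.create_file(**kwargs)`.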

Philosophy

- Plug & Play: Start with our defaults, then customize to your needs.

- Agnostic: Built on LiteLLM, supporting any LLM provider. Minimal opinions, maximum flexibility.

- Simplicity: Clean, readable code that's easy to understand and modify.

- No Lock-in: Take full ownership of the code. Copy what you need directly into your codebase.

Contributing

We welcome contributions! Feel free to:

- Submit issues for bugs or suggestions

- Fork the repository and send pull requests

- Share how you've used AgentPress in your projects

Development

- Clone:

```bash
git clone https://github.com/kortix-ai/agentpress
cd agentpress
```

- Install dependencies:

```bash
pip install poetry
poetry install
```

- For quick testing:

```bash
pip install -e .
```

License

Built with ❤️ by Kortix AI Corp